Building a Home Lab Server in 2022

Why

I have been using various embedded and consumer computers, like PC Engines boards, Raspberry Pi, NUC and similar small desktops, to run both short- and long-term tasks. They are fine (and very silent), but they obviously limit what can be done, and there is always a need to reinstall the OS etc. Because these computers are usually not powerful enough and have no remote management capability, running a virtualization solution on them does not make much sense for me. So to overcome these issues, I finally decided to build a reasonably powerful, proper server to use at home.

A powerful consumer computer (such as 8-16 cores, 64GB+ RAM) is a solution, but such a system usually does not have remote management capability, has a limited number of PCIe lanes, lacks ECC support and in general is not optimized for stability. It might still be OK, but I decided to take the enterprise route.

So my purpose is to have a reasonably powerful “proper” virtualization server.

It is common to purchase servers from well-known vendors like HP and Dell. The remote management capability is usually developed in-house by these manufacturers, and they optimize the servers for particular enterprise needs and for datacenters. However, this is not optimal in my case, because I will run it inside the home, so it has to be as silent as possible, which is not a concern for a datacenter. Also, not surprisingly, these servers are pretty expensive if you go for high performance options.

Budget

Initially I set a budget up to 2500 USD for an entry level simple server with consumer grade components, but then increased this to 4500 USD to have a proper server not just a powerful consumer computer.

It is not very straightforward to compare this to an HP or Dell server, since it is difficult to build the same configuration. Usually such servers are either less powerful (fewer cores, no NVMe) for 1 CPU systems or too powerful (>16 cores and 2 CPUs), and most configurations are only available as rack, not tower. I think it is not the CPU but the RAM and the storage that are priced much higher in ready-to-buy servers, so a similar configuration would probably be more than 6K USD.

If consumer components (Ryzen 9 5950X, X570 mainboard without remote management, no ECC memory) are used, the cost can be decreased by approx. 1500 USD to less than 3000 USD.

Bill of Materials

| Component | Model | ~Price (USD) |
|---|---|---|
| CPU | AMD EPYC 7313P | 1000 |
| Mainboard | Supermicro H12SSL-NT | 700 |
| RAM | 8x Micron DDR4 RDIMM 16GB*** | 950 |
| Storage | 2x Samsung PM9A3 1920GB | 760 |
| GPU* | - | 0 |
| Network** | - | 0 |
| Case | Corsair 275R Airflow*** | 85 |
| Power Supply | Seasonic Prime TX-1000 | 340 |
| CPU Fan | Noctua NH-U14S | 110 |
| Front Fan | Noctua NF-A14 | 70 |
| Top&Rear Fan | 2x Noctua NF-S12A | 40 |

Total: ~4100 USD
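As a quick sanity check of the total, the prices from the table can simply be added up. This is only a sketch of the arithmetic; the dictionary name and the helper function are my own, and the prices are the approximate ones listed above.

```python
# Approximate prices (USD) copied from the Bill of Materials table.
# GPU and Network are listed as 0 there, so they are left out.
bom_usd = {
    "CPU: AMD EPYC 7313P": 1000,
    "Mainboard: Supermicro H12SSL-NT": 700,
    "RAM: 8x Micron DDR4 RDIMM 16GB": 950,
    "Storage: 2x Samsung PM9A3 1920GB": 760,
    "Case: Corsair 275R Airflow": 85,
    "Power Supply: Seasonic Prime TX-1000": 340,
    "CPU Fan: Noctua NH-U14S": 110,
    "Front Fan: Noctua NF-A14": 70,
    "Top&Rear Fan: 2x Noctua NF-S12A": 40,
}


def total_usd(items):
    """Sum the prices of all listed components."""
    return sum(items.values())


if __name__ == "__main__":
    # The exact sum is a little below the rounded ~4100 USD figure.
    print(f"Total: {total_usd(bom_usd)} USD")
```

The exact sum is slightly below the rounded total given above, which is expected since all prices are approximate.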

*: Remote management controller has a VGA controller, I am not using any other GPU at the moment.

**: I am using the onboard 10G LAN ports but also see updates.

***: This is changed, see updates.

Components

CPU: AMD EPYC 7313P

A very high-end desktop CPU (12-16 cores), Intel i7, i9 or AMD Ryzen, is actually enough in terms of computational power. However, these usually do not support ECC (or you have to very carefully match a particular CPU with a particular mainboard etc.) and their mainboards do not have remote management options. For this build, remote management is a must, ECC is probably a must.

EPYC 7313P

There are so many Xeon and EPYC products, and it is hard to choose. I selected AMD EPYC 7313P, because:

- It is available. Many of these server CPUs are not in stock so actually impossible to purchase.

- A mainboard with the features I am looking for and supporting this CPU is available.

- It has 16 cores. I was actually planning to build a server with 8 or 12 cores (and having a desktop CPU), but then decided to build one that would last much longer and consolidate probably everything I would ever need for home use and for personal projects.

- It has many PCIe lanes.

- In case I need more than 16 cores in the future, there are EPYC 7003 series CPUs available with up to 64 cores (but naturally quite expensive at the moment).

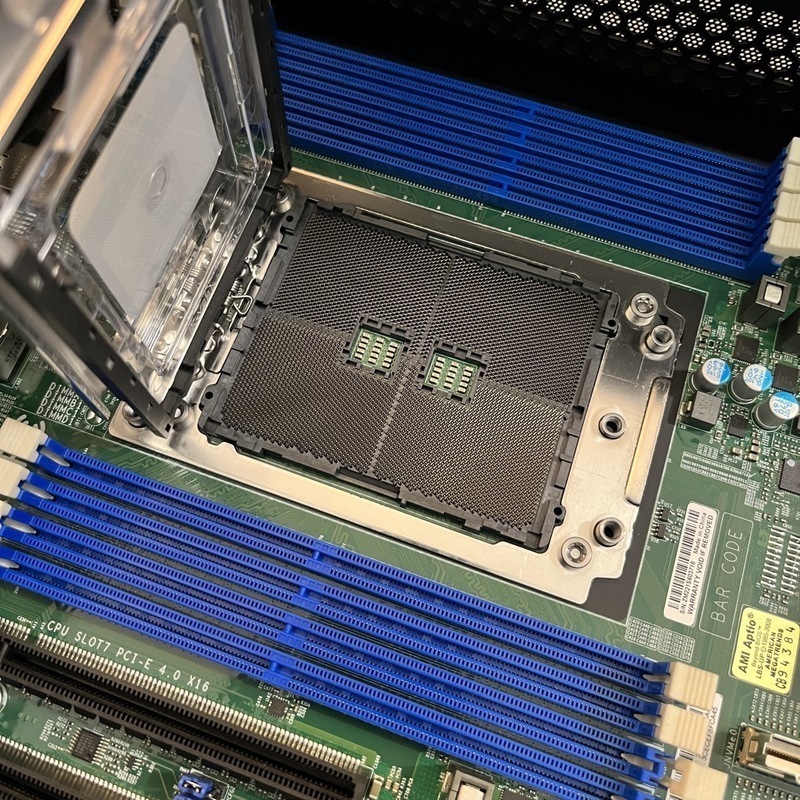

Mainboard: Supermicro H12SSL-NT

One problem with EPYC is that there seem to be only a few mainboard options compared to Xeon, particularly for the EPYC 7003 series. Two product lines are ASRock ROMED8 and Supermicro H12SSL. They are very similar and each has a few variants. Because of availability, and because I do not plan to use an onboard storage controller (for SAS and RAID), I purchased a Supermicro H12SSL-NT. It has 8x DIMM slots, 2x M.2 PCIe 4.0 x4 slots, many PCIe slots and remote management, so everything I need. I already have 10Gb network adapters that I plan to install, so H12SSL-i might have worked as well, but it was not available to purchase.

SP3 Socket on H12SSL

I did not list it in the Bill of Materials, but I also installed a TPM 2.0 module (Supermicro AOM-TPM-9665V).

RAM: 8x Micron DDR4 RDIMM 16GB MTA9ASF2G72PZ-3G2B1

Quite an expensive item in the build because there are 8 of them. This is one of the parts recommended by Micron to be used on H12SSL-NT. EPYC is quite unique in that it has 8 (!?) memory controllers, so you get the best out of the CPU when you populate all 8 DIMM slots. Initially, I considered purchasing 8x 8GB for a total of 64GB, then decided to get 4x 16GB and add 4 more in the future when needed. Then I decided to install all 8 now.

Storage: 2x Samsung PM9A3 1920GB

I first purchased a good consumer unit, Samsung 980 Pro. Then, I realized this one (PM9A3), an enterprise unit, is priced almost the same. It is a bit slower than 980 Pro, but has at least 3x more endurance.

Supermicro lists only Samsung PM983 in the compatibility list, but it seems PM9A3 works fine as well.

I actually purchased only one first, as I was not sure what kind of main storage I want to use (I was considering iSCSI). Then I decided to use Proxmox VE, and use ZFS, so I purchased another one to create a ZFS mirror pool.

PM9A3 comes without a heatsink. I do not know if one is needed, but I purchased ICY BOX IB-M2HS-1001 heatsinks and I am using them.

GPU

I actually initially installed a GPU but then decided to keep the GPU in my PC, so there is no other video card installed at the moment.

Network

Update: I still use the onboard network ports but also installed extra NICs, see updates.

The mainboard has 2x 10G network ports provided by a Broadcom chip. I was initially planning to use another network adapter, but then decided to use only the onboard one.

Case: Corsair 275R Airflow

Update: Because I decided to move my NAS into this server, the case has changed, see updates.

I do not care much about the case. I do not need any 5.25" or 3.5" support, and I only care if it has a reasonably open design. It seemed this model fits my requirements, and it is not expensive. It supports 165mm CPU cooler height, 140mm fan at front and 120mm fan at the back. It also supports 140mm fans at top but over the RAM slots only 120mm can be used with the RAM modules I am using. It is a bit large, but it is the minimum size I could find supporting these cooling options. It has a glass side panel, unnecessary but a nice touch. The case also has a magnetic dust filter on top and at front, which is a nice idea.

Power Supply: Seasonic Prime TX-1000

I do not know much about power supply brands, but it seems Seasonic is a well-known one. This particular power supply is also a bit expensive compared to the alternatives. 1000W might be a bit too much, but running a power supply at as low a load as possible is a good thing. I calculated that the maximum power the server may consume (with a reasonable GPU) is a little over 500W.

This power supply has a hybrid mode, meaning it can fully stop its fan when possible, provided it is mounted with the ventilation side on top.

Fans: Noctua

The Corsair case comes with 3 non-PWM 120mm fans. As I want the computer to be as silent as possible, I removed these and installed a Noctua 140mm at the front (I already had this, there is no particular reason I use 140mm), and a Noctua 120mm at the rear and also on top. For the CPU, I installed a Noctua NH-U14S.

NH-U14S is supported by the mainboard, but only in horizontal (fans blowing to mainboard top) orientation.

Installation

I acquired most of the parts pretty quickly. The mainboard, M.2 storage and RAM modules were not in stock, but they arrived in less than a week. Because I purchased the second M.2 and the second set of 4 RAM modules later, it took more than two weeks to finalize the build.

During installation, I removed the 3.5" cage and the front panel audio cable from the case as I will not use these. Other than the issue with one of the screw locations on the mainboard, the installation was straightforward.

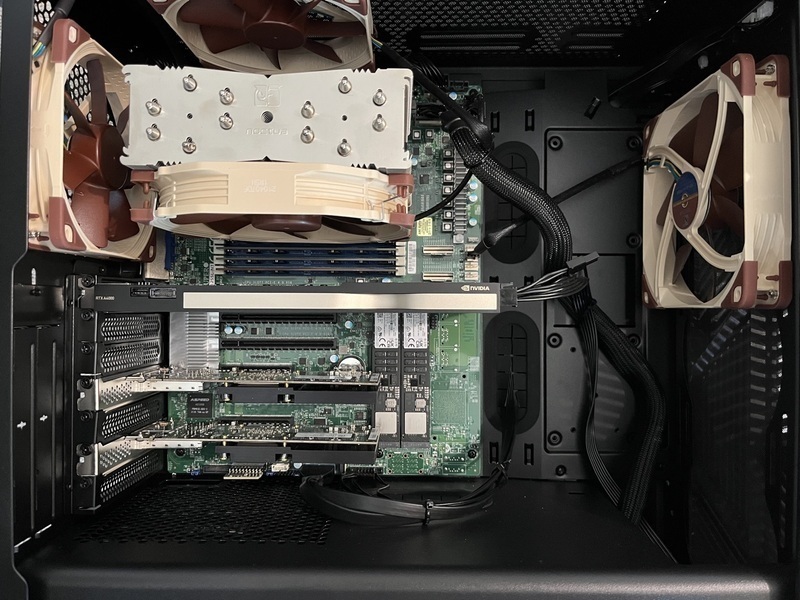

Home Lab Server 2022 with a GPU and 2x NIC installed. However, I decided to remove the GPU and use the onboard LANs only.

Issues and Concerns

The mainboard has one screw location that is not compliant with the ATX standard, so I had to remove the standoff from the case and leave this location empty. That is strange.

The heatsink of what I think is the 10G network IC on the mainboard concerns me a little. It is next to the PCIe slots at the back. It is not limiting anything in terms of space, but having a heat source there does not make me happy. This might be a reason to prefer H12SSL-i rather than -NT (or -C instead of -CT). Because they do not have 10G ports, H12SSL-i and -C have no heatsink at that location.

The first PCIe slot (PCIe Slot 1 x16) on H12SSL is very close to the (front) USB header connector. I do not think it can be used safely with a PCIe x16 card that has a heatsink.

The design of the case makes it a bit harder to unplug the network cable from the management/IPMI port of the mainboard. As this will not be done often, it is not an important issue.

Supermicro does not provide customizable fan control. I hope that is not going to be too big an issue with quiet fans, otherwise I need to find some workarounds.

Recommendations and Alternatives

You might want to consider H12SSL-i if you do not need 10G support on the mainboard. If you want to use ESXi and need RAID support for local storage, H12SSL-C (or -CT with 10G ports) might be a better choice.

It was difficult for me to find ASRock mainboards, that is why I did not consider them.

When you need more cores, EPYC 7443P (24-core) is priced OK at the moment (~1.5x of 7313P), whereas 7543P (32-core) is too expensive (almost 3x of 7313P).

Performance Tests

After the build was completed, I ran a few performance tests before installing the virtualization solution.

The PassMark score is as expected:

I also ran the STREAM benchmark, and the best result I could get was around 110 GB/s. This was without any particular optimization, so I think this result can be improved.
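To put the STREAM result in context, it can be compared with the theoretical peak memory bandwidth. The sketch below assumes all 8 channels run at DDR4-3200 (which is what the "3G2" in the RAM part number suggests) with a 64-bit data bus per channel; the function name is my own and the numbers are only as good as these assumptions.

```python
def peak_bandwidth_gbs(channels, megatransfers_per_s, bus_width_bits=64):
    """Theoretical peak bandwidth in GB/s (decimal) for a DDR memory setup."""
    bytes_per_transfer = bus_width_bits // 8
    return channels * megatransfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Assumption: 8 populated channels, DDR4-3200 (3200 MT/s each).
peak = peak_bandwidth_gbs(channels=8, megatransfers_per_s=3200)

# Measured STREAM result from the text above.
measured = 110.0

if __name__ == "__main__":
    print(f"theoretical peak: {peak:.1f} GB/s")
    print(f"measured/peak:    {measured / peak:.0%}")
```

Under these assumptions the measured value is a bit over half of the theoretical peak, which fits the impression that there is room for improvement.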

Supermicro BMC Fan Control

Supermicro fan control is very basic; there are only 4 fan modes (optimal, standard, full, heavy I/O) to choose from. I use optimal speed (which I think starts the fans from 30% speed), but it is important to set the lower and upper thresholds of the fans. This can be done with ipmitool.
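Setting fan thresholds with ipmitool could look roughly like the sketch below. It only builds and prints the `ipmitool sensor thresh` commands by default. The sensor names (FAN1, FANA) and all RPM values are placeholders for illustration, not the values I use; check `ipmitool sensor` for the real sensor names and the fan datasheets for sensible limits.

```python
import subprocess


def fan_threshold_cmds(sensor, lower, upper):
    """Build ipmitool commands that set fan RPM thresholds.

    lower = (non-recoverable, critical, non-critical) lower limits
    upper = (non-critical, critical, non-recoverable) upper limits
    """
    base = ["ipmitool", "sensor", "thresh", sensor]
    return [
        base + ["lower"] + [str(v) for v in lower],
        base + ["upper"] + [str(v) for v in upper],
    ]


def apply(cmds, dry_run=True):
    """Print the commands, or run them when dry_run is False."""
    for cmd in cmds:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical sensor names and RPM values, for illustration only.
    for name in ("FAN1", "FANA"):
        apply(fan_threshold_cmds(name, (100, 200, 300), (1600, 1700, 1800)))
```

Lowering the lower thresholds matters with slow-spinning fans, because otherwise the BMC may consider a quiet fan as failing.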

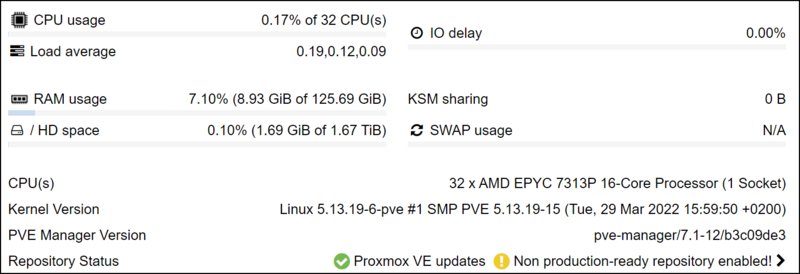

Virtualization: Proxmox VE

I have been using ESXi for 10+ years, and this was actually what I had in mind when I started building this server. Just out of curiosity, I searched for alternatives. I know about Hyper-V, but I would prefer using ESXi. Then I saw Proxmox VE and decided to give it a try. Being a Linux user for many years, I really like it, more than ESXi. It is simpler to use and understand than ESXi, and it supports ZFS, so I can run it purely on local storage (2x M.2) without hardware support. It also supports containers in addition to virtual machines. So I decided to keep it as the virtualization solution.

Proxmox VE Summary

NUMA Topology

Because EPYC is a multi-chip module (in the sense that there are actually 4 chips plus an I/O block interconnected in a single package), the L3 cache and I/O resources are distributed in a certain way among these chips. It is possible to make a single socket EPYC system, like the one built here, declare itself as having multiple NUMA nodes. This is done with the NUMA Nodes per Socket (NPS) and ACPI SRAT L3 Cache as NUMA Domain BIOS settings. Which setting is better, which is better for Proxmox or KVM, and whether it really makes any difference for your setup is a big topic, but for KVM AMD recommends (source: AMD Tuning Guide AMD EPYC 7003: Workload) setting NPS=4 and enabling ACPI SRAT L3 Cache as NUMA Domain, so I keep it like that at the moment. I recommend checking out the various AMD documents if you need more information.
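To see what the host actually reports after changing these BIOS settings, the NUMA nodes and their CPUs can be read from sysfs on Linux. This is a minimal sketch, assuming the standard /sys/devices/system/node layout; the function names are my own, and tools like numactl or lscpu show the same information.

```python
from pathlib import Path


def parse_cpulist(text):
    """Expand a kernel cpulist string such as '0-3,16-19' into a list of ints."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_nodes(root="/sys/devices/system/node"):
    """Return {node_id: [cpu, ...]} for every NUMA node found under root."""
    nodes = {}
    for node_dir in Path(root).glob("node[0-9]*"):
        node_id = int(node_dir.name[len("node"):])
        nodes[node_id] = parse_cpulist((node_dir / "cpulist").read_text())
    return nodes


if __name__ == "__main__":
    for node_id, cpus in sorted(numa_nodes().items()):
        print(f"node {node_id}: {len(cpus)} CPUs -> {cpus}")
```

On a single socket system, seeing more than one node here is a quick confirmation that the NPS or L3-as-NUMA setting took effect.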

Conclusion

I am writing this conclusion after 2 weeks or so. I am pretty happy with the setup so far. It is not a surprise, but the capacity and the capability of the system are very good. I think it will take a very long time until I need another server at home.

Virtualizing pfSense and a Public Speedtest Server

(Update on 2022-11-21)

I decided to virtualize my physical pfSense firewall and consolidate it onto this server. I installed an Intel X710-BM2 and an Intel X540-T2 network adapter and assigned them (via PCIe passthrough) to pfSense. I also installed a public Speedtest server. Because of the NICs, I decided to add another 140mm fan to the system and connected it to the FANB header, which is controlled by the system temperature, not the CPU temperature.

Current Setup with 2 NICs

(Update on 2023-02-01)

I decided to use only one NIC to free a PCIe slot, mainly to decrease energy use. So I kept only the X540-T2 and removed the X710-BM2.

Energy Consumption

(Update on 2022-11-22)

You might be wondering about the energy consumption of this server, particularly with the increasing energy prices in Europe.

I did a primitive test just to give an idea. I have the server as described above with 2 NICs, an Intel X710 and an Intel X540. Previously, in another post, I saw the CPU reporting a minimum of around 55W. Considering the mainboard, memory, and NICs, with almost no load (some VMs running, all below 5% CPU), I see around 110W consumption. I read the energy use from a myStrom smart plug (average energy consumed per second, the Ws field in the report API call, which is numerically the average power in watts).
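Reading the plug could be scripted along these lines. This is a sketch under assumptions: it assumes the plug answers a plain HTTP GET on /report with a JSON object containing the Ws field mentioned above, and the host address in the usage comment is a placeholder.

```python
import json
from urllib.request import urlopen


def parse_ws(body):
    """Extract the Ws value (average energy per second, i.e. watts) from a report."""
    return float(json.loads(body)["Ws"])


def read_ws(host, timeout=5):
    """Fetch the report from the plug and return its Ws value.

    Assumption: the report is served at http://<host>/report as JSON.
    """
    with urlopen(f"http://{host}/report", timeout=timeout) as resp:
        return parse_ws(resp.read().decode("utf-8"))


# Usage (the address is a placeholder for the plug's IP):
#   print(read_ws("192.168.1.50"))
```

Polling this a few times at idle and under load and noting the values is essentially the primitive test described here.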

In order to test the maximum consumption, I created a VM with 32 cores and 120GB memory, then I ran stress -c 32 -m 32 --vm-bytes 2048M. This increased the consumption to 226W, where it stayed consistently. The default TDP of EPYC 7313P is 155W, so I think this is a reasonable result. Basically the CPU runs roughly between 50W and 150W. At full load, the CPU temperature also reached 60C before the fan speed slightly increased (I stopped the test there, so I am not sure at what temperature the fan control tries to keep it).

With the increased electricity prices in Switzerland starting from 2023, and I hope I calculated this correctly, this means it will cost approx. 4 CHF per week at idle (1 CHF is approx. 1 EUR), and approx. two times more at full load. That means it will cost at least 200 CHF per year.
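The cost estimate can be reproduced with a few lines of arithmetic. The electricity price is not stated above, so the sketch below derives the price per kWh that is implied by "4 CHF per week at about 110W" rather than assuming a tariff; the variable and function names are my own.

```python
HOURS_PER_WEEK = 24 * 7


def kwh_per_week(watts):
    """Energy used in one week at a constant power draw, in kWh."""
    return watts * HOURS_PER_WEEK / 1000


idle_kwh = kwh_per_week(110)  # idle measurement from the text
load_kwh = kwh_per_week(226)  # full-load measurement from the text

# Price per kWh implied by the stated 4 CHF per week at idle (derived, not measured).
implied_chf_per_kwh = 4.0 / idle_kwh

idle_chf_per_year = 4.0 * 52
load_chf_per_week = load_kwh * implied_chf_per_kwh

if __name__ == "__main__":
    print(f"idle: {idle_kwh:.2f} kWh/week, load: {load_kwh:.2f} kWh/week")
    print(f"implied price: {implied_chf_per_kwh:.3f} CHF/kWh")
    print(f"idle per year: {idle_chf_per_year:.0f} CHF")
    print(f"full load per week: {load_chf_per_week:.2f} CHF")
```

The full-load figure comes out at roughly twice the idle cost, matching the estimate in the text.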

Reflections

(Update on 2022-12-04)

I would like to evaluate my decision on building this server for home use. Not in a particular order:

Positive remarks:

- stability: the server is running 24/7 and it is rock solid, I have never experienced any issue.

- noise level: the server is very silent in normal use, it is almost dead silent even at night.

- Supermicro BMC fan control: I was not sure if Supermicro fan control would work fine for me, because it does run the fans at a certain speed even with a light load. At least with Noctua fans, this turned out to be OK. I only set the minimum/maximum RPMs of the fans using IPMI.

- Proxmox VE: very happy with Proxmox VE, I definitely recommend it.

- remote management: Supermicro remote management/BMC is OK. However, I should say it is not like Dell iDRAC, which I am familiar with. I actually only need to access the console as the server does not have a screen/keyboard etc., so for this purpose it does the job.

Neutral or slightly negative ones:

- power efficiency: this is related both to the size of the system and to the fact that it is built from enterprise/server grade components. Naturally it consumes more energy than a smaller system, and probably consumes more than a similar consumer system at the same performance level. The issue is that it is difficult to scale up and down easily without changing parts (using fewer cores, less memory etc.). So it cannot run at NUC energy levels when there is very little load and at full energy at high load. So I should say this system (at this spec) is not very good if you plan to run a very light load. On the other hand, because I also virtualized pfSense, I do not need to keep another device/server running, so effectively I do not think the energy use has an important effect for my use case.

- server spec: the server is (still) over-spec for my needs at the moment, but I can do anything easily, so I like this flexibility. However, it comes with limited power efficiency or higher power use. To decrease both the initial cost and the ongoing energy use, it might be a good idea to consider a slightly lower spec system. However, when upgrading, the used parts will be sold on the second hand market and this will cause a higher total cost of ownership. This is a difficult problem to solve for home use.

- onboard 10G: I had a concern about the temperature of the 10G network IC initially. I had no issues, but since the server has a lot of PCIe bandwidth, I would now have preferred the same mainboard without onboard 10G.

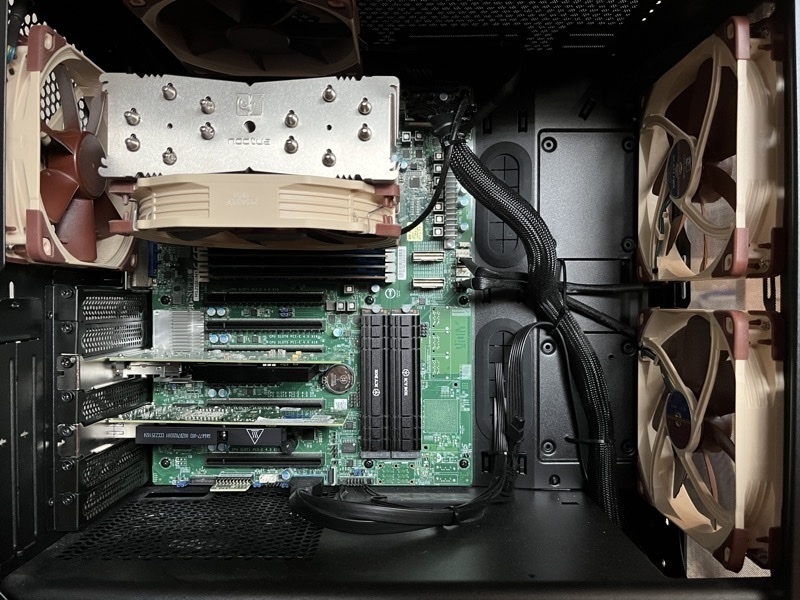

NAS and Tape Backup

(Update on 2023-02-10)

I decided to move my QNAP NAS to this server. I bought additional WD HC550 18TB disks and a new case (Fractal Define R5) that can accommodate 8x 3.5" HDDs. I was planning to use the onboard SATA ports (8x), but passing these through to the VM did not work out well; it was sometimes giving errors or warnings. I think, but I am not sure, that this is because the AMD Milan SATA controller is not fully supported on TrueNAS Core, which is FreeBSD based. So I purchased an LSI 9207i HBA, assigned it to the VM and connected the disks to it. I installed TrueNAS Core as a VM and it works seamlessly.

In addition to this, I also decided to try a tape drive for backup. I decided on LTO-5, because the drives and the cartridges are not expensive and 1.5TB uncompressed capacity is acceptable for me. I purchased a Quantum LTO-5 drive and an LSI 9207e HBA and assigned it to a Linux VM that I will use solely with the tape drive.

Update on 2023-05-30

Because I have virtualized pfSense, I was using an Intel X540-T2 with PCI passthrough. As I am not using the full 10G bandwidth most of the time, I decided to remove the physical NIC and use the virtual NICs from Proxmox VE.

Update on 2023-11-29

It has been more than 18 months since I built this server. It has been serving all my needs quite well. Compared to the last update, I have the following changes:

- I decided to use the full network bandwidth, so I put back the Intel X540-T2 NIC and assigned it to pfSense.

- I also installed another 10G NIC for TrueNAS Core, so I am accessing the NAS through a physical adapter. It is quite easy to reach over 500MB/s, and in certain cases it can get close to 1GB/s. For a very high throughput like 1GB/s, there have to be multiple connections.

- I also installed an enterprise PCIe SSD (Intel) and I am using it as a cache in TrueNAS Core. I did not particularly need this, but I had the SSD, so why not use it.

- I also installed a USB 3.0 PCIe controller and I am using it with a Windows host for USB tests.

I still think the same as I wrote in Reflections (above) a year ago. Because I moved the NAS here, and TrueNAS Core uses ZFS, I assigned quite a large portion of the RAM to TrueNAS Core. One reflection I can add is that I wish I had 256 GB RAM, or that I had installed the 128 GB in 4 slots so I could upgrade it easily.

Update on 2024-06-14

EPYC Milan is very powerful for the light loads that usually run on a home server. That is also my case, and although the VMs hardly use more than 20% of the CPU, I was getting close to the memory limit. KSM (Kernel Samepage Merging) is working fine, but I had still been thinking of upgrading the RAM for some time. Unfortunately, the memory modules are still quite expensive. I found a second hand offer for 256GB memory (8x SK hynix 32GB) for less than half of what I paid for the new 128GB, so I purchased and installed these.
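How much memory KSM is saving can be estimated from sysfs on the Proxmox host. The sketch below reads the kernel's pages_sharing counter and multiplies it by the page size; this is a rough estimate only, and the function name is my own.

```python
import os
from pathlib import Path


def ksm_saved_bytes(ksm_dir="/sys/kernel/mm/ksm", page_size=None):
    """Estimate the memory saved by KSM as pages_sharing * page size."""
    if page_size is None:
        page_size = os.sysconf("SC_PAGE_SIZE")
    pages_sharing = int((Path(ksm_dir) / "pages_sharing").read_text())
    return pages_sharing * page_size


if __name__ == "__main__":
    # Only meaningful on a Linux host where KSM is available.
    if Path("/sys/kernel/mm/ksm/pages_sharing").exists():
        print(f"KSM saves about {ksm_saved_bytes() / 2**30:.1f} GiB")
```

If this number is small compared to the total RAM assigned to the VMs, KSM alone will not postpone a memory upgrade for long.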

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.